Top tech companies have generated so much business value from ML that many of them went AI-first years ago. Many smaller companies, however, are still struggling to replicate those gains in their own businesses. They hire great ML engineers and data scientists, who painstakingly obtain and clean a well-labeled dataset and train a model using state-of-the-art modeling techniques. They even see phenomenal model performance during training, yet the model doesn’t perform well in production, and business metrics don’t improve as much as expected despite months of investment.

One of the leading (but certainly not the only) causes of this is what is called “information leakage”: the phenomenon where the model, at training time, gets access to information it should not have. This leakage makes the model appear far better offline than it actually is and, in fact, makes it worse in production than it would be without the leaked information. In this post, we will look at two of the most common types of information leakage in real-world ML systems. One of them is commonly understood; the other is more subtle. We will also look at best practices for eliminating both kinds of leakage.

1. Leakage between train and test datasets

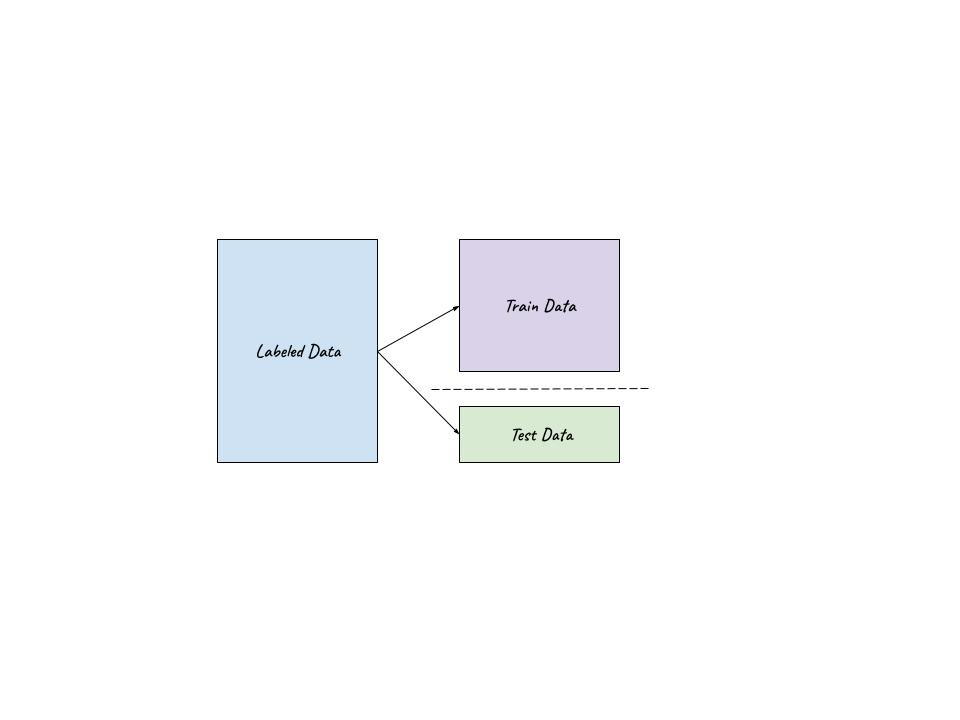

Let’s imagine you’re building a personalized recommendation engine for an e-commerce product. As part of this, you decide to train a model that predicts the probability of a user buying a particular product. So you create a dataset of all the products that were shown to users. If a product was eventually bought by the user, it gets a label of 1, otherwise 0. You then randomly divide this dataset into two parts: one for training and one for testing. You train your model only on the train dataset, record its performance, and then run it on the test dataset to verify that the model can replicate its training performance. If the performance is good and replicates across the train/test sets, you ship it to production (or maybe run it as an A/B test). After all, it must mean that the model is robust and generalizes well to users/products it hasn’t seen before. Right?

Wrong!

Let’s zoom in on a particular user session in which the user clicked on a product (say A), added it to the cart, was then shown a related product (let’s call this one B), and ended up buying both A and B. Now imagine that we randomly split our dataset in such a way that the example of the user viewing product B went to the training dataset and the example of the user viewing product A went to the test dataset.

Since B was shown only because the user had already put A in the cart, and B is in the training dataset, the information that the user expressed interest in A is implicitly present in the training dataset. But since A itself is in the test dataset, information is leaking from the test dataset into the train dataset. This is a problem because the model can exploit this information to show great performance on the test dataset by cheating, without learning anything useful. This is the information leakage problem: it causes the model to rely on leaked information instead of learning real signals.

Another form of this type of leakage occurs when some examples about an object (e.g. a user or a video) are present in the training data and other examples about the same object are present in the test data. The model can then memorize things about that object from the training dataset and reuse them on the test data, which looks like good performance but does not reflect real generalization.
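One way to make this concrete is to check how many sessions or users appear on both sides of a split. The snippet below is a minimal sketch, not a complete leakage audit; the row layout and the field names (`user_id`, `session_id`, `product`, `bought`) are assumptions made up for illustration.

```python
def entity_overlap(train_rows, test_rows, key):
    """Return the set of key values (e.g. user IDs) present in both splits."""
    train_keys = {row[key] for row in train_rows}
    test_keys = {row[key] for row in test_rows}
    return train_keys & test_keys


# Hypothetical impression logs: one row per product shown to a user.
train = [
    {"user_id": "u1", "session_id": "s1", "product": "B", "bought": 1},
    {"user_id": "u2", "session_id": "s2", "product": "C", "bought": 0},
]
test = [
    {"user_id": "u1", "session_id": "s1", "product": "A", "bought": 1},
    {"user_id": "u3", "session_id": "s3", "product": "D", "bought": 0},
]

# Session s1 (the A/B example from the text) straddles both splits.
shared_sessions = entity_overlap(train, test, "session_id")
shared_users = entity_overlap(train, test, "user_id")
print("sessions in both splits:", shared_sessions)
print("users in both splits:", shared_users)
```

A non-empty overlap is not automatically a bug, since whether sharing a user across splits is acceptable depends on the application, but it is a cheap signal that a random split deserves a closer look.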

In general, information leaks like this happen more often than many people realize, and they need to be minimized, if not eliminated, in order to get good production performance.

Solutions

There is no one-size-fits-all technique for fixing information leakage like this. The application and its context determine what kind of information can be safely shared across the train/test datasets without polluting the models.

That said, there is one very simple solution that applies fairly broadly: split the train and test data by timestamp, such that all the test examples occur after all the train examples. This way, information about what happens in the test data doesn’t leak into the train data.

This way of splitting the train/test datasets obviously makes sense only for problems that have a natural notion of time ordering. Many problems where a user is interacting with an app/website fall into this category (e.g. ranking/recommendation problems, or house prices on a product like Zillow). On the other hand, it might not work for many NLP/computer vision problems.
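A timestamp-based split can be sketched in a few lines. This is an illustrative example only: the `timestamp` field, the sample rows, and the choice of cutoff are all assumptions, and a real pipeline would pick the cutoff based on how much recent data it wants to hold out.

```python
from datetime import datetime


def split_by_time(rows, cutoff, ts_key="timestamp"):
    """Put every example before `cutoff` in train and the rest in test."""
    train = [r for r in rows if r[ts_key] < cutoff]
    test = [r for r in rows if r[ts_key] >= cutoff]
    return train, test


# Hypothetical labeled impressions with the time each one was shown.
rows = [
    {"user_id": "u1", "product": "A", "bought": 1, "timestamp": datetime(2024, 1, 3, 10, 0)},
    {"user_id": "u1", "product": "B", "bought": 1, "timestamp": datetime(2024, 1, 3, 10, 5)},
    {"user_id": "u2", "product": "C", "bought": 0, "timestamp": datetime(2024, 1, 12, 9, 0)},
    {"user_id": "u3", "product": "A", "bought": 0, "timestamp": datetime(2024, 1, 15, 18, 30)},
]

cutoff = datetime(2024, 1, 10)
train, test = split_by_time(rows, cutoff)

# Sanity check: the latest train example precedes the earliest test example.
assert max(r["timestamp"] for r in train) < min(r["timestamp"] for r in test)
print(len(train), "train examples,", len(test), "test examples")
```

Note that both examples from the user's session (products A and B) now land on the same side of the split, which is exactly what the random split failed to guarantee.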

2. Leakage from future via incorrect features

Imagine you’re building a notification recommendation engine for a news product that sends a notification to a user when a highly relevant news story shows up. At any point in time, a few hundred candidate news stories are available and at most one notification can be sent out. So you train a model that assigns a score to each candidate, and you send out the top-scoring news story as a notification.

Now you want to improve this model by trying out a new modeling technique. For that, as with any other ML model, you need to generate a training dataset, which consists of examples with their correct labels. So you take all the stories that were sent out as notifications in the last month, along with whether the user opened them or not (the label in this case), and compute all your production ML features for all these stories. This becomes the training data, and you can now try out your shiny new modeling technique on it. Right?

Wrong!

Let’s zoom in on a particular story that was sent 11 days ago. When the model made the decision to send this story out, it only had access to the information available up to that moment in time, which was 11 days ago. But when we compute all the features at training time (which is now), we end up including information that wasn’t known back then. For instance, maybe there is a feature for “does the user follow the news source”. The value of this feature for a particular user/notification was “false” 11 days ago, but after the user saw the notification, they liked it so much that they started following the source. Now when we compute the features to build the training data, this feature will come out as “true”. Our new model may be fooled into learning that this feature is a good indicator of the user’s propensity to open the notification, when in fact it is learning to rely on information that is not available at prediction time.
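The difference between computing the feature “now” and computing it “as of the send time” can be shown with a toy example. Everything here is hypothetical: the event tuple layout, the `follows_source` helper, and the dates are invented for illustration, and unfollow events are ignored to keep the sketch short.

```python
from datetime import datetime

# Hypothetical follow events: (user_id, source_id, followed_at).
follow_events = [
    ("u1", "daily_news", datetime(2024, 3, 2, 9, 30)),
]


def follows_source(user_id, source_id, as_of):
    """True if the user had followed the source at or before `as_of`."""
    return any(
        u == user_id and s == source_id and t <= as_of
        for u, s, t in follow_events
    )


sent_at = datetime(2024, 3, 1, 8, 0)      # when the notification was sent
train_time = datetime(2024, 3, 12, 8, 0)  # when training data is built, 11 days later

# Computed at training time: includes the follow that happened after the send.
leaky_value = follows_source("u1", "daily_news", as_of=train_time)
# Computed as of the send time: what the production model actually saw.
correct_value = follows_source("u1", "daily_news", as_of=sent_at)

print(leaky_value, correct_value)  # True False
```

The label (the user opened the notification) is the same in both cases; only the feature value differs, and the leaky version is correlated with the label for a reason that will never hold at prediction time.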

This kind of information leakage can show up in all types of machine learning. Some domains (e.g. computer vision or NLP) that rely on relatively static features (e.g. pixel values at certain positions) are less affected. But almost any personalization system depends primarily on user engagement features (e.g. a user’s CTR on a certain kind of content), which change every minute with every user interaction. Hence information leakage is a major problem in real-world ranking and recommendation systems.

Solutions

The desired outcome is quite clear — for that particular notification that was sent 11 days ago, we need to know what the value of every feature would have been at that moment in time. There are two ways of accomplishing that:

- Logging the features: whenever a prediction is made, all the available features are computed and logged then and there. All future model training is only allowed to use previously logged features (or static features that are known not to change over time). This technique completely eliminates this kind of information leakage and is simple to implement. Its main downside is that trying out new feature ideas becomes slower, because any new feature first needs to be logged for some number of days before there is enough data to train on.

- Reconstructing the features: a time-versioned history of all the data is maintained, and feature values are recomputed as of each past moment when a notification was sent. This, as you can imagine, requires a versioned history for every piece of data that might be needed to reconstruct a feature, which takes a lot of storage and makes the technique very complex (here is Netflix’s architecture from 2016). It also doesn’t work when a feature needs to be built from a data source that is new and doesn’t yet have a fully versioned history.
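Both approaches can be sketched side by side. This is a toy, in-memory illustration under stated assumptions, not a production design: `FeatureLog` and `VersionedValue` are hypothetical names, the list inside `FeatureLog` stands in for a durable log, and `VersionedValue` assumes writes arrive in chronological order.

```python
import bisect
import json
from datetime import datetime


class FeatureLog:
    """Approach 1: persist the exact features used at prediction time."""

    def __init__(self):
        self.records = []  # stand-in for a durable log or table

    def log(self, example_id, features, predicted_at):
        # Serialize immediately so later changes to `features` cannot alter the record.
        self.records.append(json.dumps({
            "example_id": example_id,
            "predicted_at": predicted_at.isoformat(),
            "features": features,
        }))

    def training_rows(self):
        return [json.loads(r) for r in self.records]


class VersionedValue:
    """Approach 2: keep every historical value with the time it took effect."""

    def __init__(self):
        self._times = []
        self._values = []

    def set(self, value, at):
        # Assumes writes arrive in chronological order.
        self._times.append(at)
        self._values.append(value)

    def as_of(self, when, default=None):
        # Index of the last write made at or before `when`.
        i = bisect.bisect_right(self._times, when)
        return self._values[i - 1] if i else default


sent_at = datetime(2024, 3, 1, 8, 0)

# Logging: capture the feature at the moment the notification is scored.
log = FeatureLog()
log.log("notif-1", {"follows_source": False}, predicted_at=sent_at)
logged = log.training_rows()[0]["features"]["follows_source"]

# Reconstruction: replay the versioned history up to the send time.
follows = VersionedValue()
follows.set(False, at=datetime(2024, 1, 1))
follows.set(True, at=datetime(2024, 3, 2, 9, 30))  # user follows after the send
reconstructed = follows.as_of(sent_at)

print(logged, reconstructed, follows.as_of(datetime(2024, 3, 12)))
```

The trade-off described above is visible even at this scale: the log needs no history but only contains features that existed when the prediction was made, while the versioned store can answer questions about new features as long as every input kept its full history.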

Relationship between two kinds of information leakage

In a manner of speaking, both kinds of information leakage are similar: they import some knowledge from the “future” into the training process. Where they differ is in their cause. The former is due to a non-ideal split of the train/test datasets (so information can leak via labels too) and makes the model look better on the test set than it really is. The latter is due to incorrect feature values (so even with the same labels, information leaks through the features). The two are independent, so it’s possible that only one of them is happening in your system and not the other. It also means that both may be present simultaneously, which is even worse.

Conclusions

- Due to the presence of time-sensitive engagement features, information leakage is a much bigger problem in personalization tasks (like recommendations or fraud detection) than in domains with relatively static features, like vision/NLP. It’s important to account for this (and many other similar nuances) in the architecture of the underlying ML infrastructure.

- If your models perform really well in offline training (e.g. have strong AUC or NE numbers) but don’t do as well in production, you may have an information leakage problem. Given how fundamental this problem is, fixing it has a high ROI and should be done before any other non-trivial investment in model training itself.